1. Introduction

In narrative theory, events are conceived of as the constituents of narratives, i.e., the source material from which narratives are built. Events are therefore considered the smallest units of narration. Accordingly, models of the so-called ‘narrative constitution’ explain the genesis of a narrative on the basis of events. These models describe how events are turned into the text of a narration through a series of (idealized) processes such as permutation and linearization. In this contribution, we discuss the possibility of representing plot on the basis of events. Our computational narratology approach to event annotation has already been automated (cf. Vauth et al. 2021)1 and adapted by Chihaia (2021) for the analysis of the representation of the Mexican state of Sinaloa in newspaper reports. Here, we elaborate on the theoretical background of our operationalization and optimize our parametrization for future applications in text analysis. We consider this to be a strongly discourse-based addition to the recent important outline of Natural Language Processing (NLP) approaches for narrative theory by Piper et al. (2021).

At the center of our efforts is the operationalization of the event concept in narrative theory. We aim at implementing it for large-scale text analysis by building a step-by-step procedure from the determination of events in narrative texts to their subsequent application for the analysis of narrativity and plot. The presented work involves two separate but connected steps: First, we outline the concept of events and the possibility of modeling plot based on events against the background of narratological assumptions, and then operationalize events and narrativity. This makes it possible to convert the annotations of these narrative micro phenomena into narrativity graphs that encompass whole texts. Second, we present procedures for optimizing this approach, again by relying on narratological assumptions, especially about narrativity, tellability and plot. We consider narrativity a property of events and event chains. Tellability, a narratological concept used to assess the degree of narrativity of a text passage, is quantified via text summaries as reception testimonies, which allows us to transform narrativity structures into plot representations. In doing so, we model plot as defined by i) the degree of narrativity of events and their representation as graphs over the text course and ii) the most tellable event sequences of a narration.

Our focus on the representation of eventfulness in the events, and thus on the discourse level of narrations, differs from many current approaches tackling events, narrativity or plot in at least one of the following two regards: First, while many approaches model plot or narrativity by approximation via other variables (such as sentiment as in Jockers (2015) or function and “cognitive” words in Boyd et al. (2020)), we address narrativity as a feature of representation, and thus address it directly, and build our operationalization of plot on top of that. Second, we do not rely on readers’ inferences in the first place or for evaluation purposes, but instead start with textual properties and only use reader-based information in a subsequent step for optimizing the approach.

2. Modeling Plot by Narrativity of Events

2.1 Events as Basic Units of Narrative

In narratology, an event is typically described as “a change of state”. Moreover, events are considered “the smallest indivisible unit of plot construction” (cf. Lotman 1977, p. 232) and “one of the constitutive features of narrativity” (Hühn 2013, p. 1). The way events are organized into narratives is commonly described in models of narrative constitution relating the fictional world (i.e., the what of narration) to its representation in the text (i.e., the how of narration). These two levels of narratives were introduced in the 1920s by Tomaševskij and other formalists. Since then, they have been addressed in a variety of – partly even contradictory – terms: among the most prominent ones are fabula/sjužet (Tomaševskij 1925), histoire/récit (Genette 1980), and story/text (Rimmon-Kenan 1983).2 Whereas these terms refer to models of narrative constitution with two levels, some models further differentiate the histoire (what) or the discours (how). For example, Stierle (1973) and Bal (1985) both introduce three levels, and Schmid (2008) even proposes four levels of narrative constitution. Regardless of the number of levels assumed and their specific conception, all models of narrative constitution are – at least implicitly – based on events. Therefore, events can be seen as a core element in narrative.

Nevertheless, to date only very few approaches in computational literary studies have addressed the narratological understanding of events in an adequate manner. This is probably due to the granularity and ubiquity of events as well as the conceptual challenges connected to them. Since events in narratology are seen as a sort of atoms of narrative, they are difficult to tackle both pragmatically and conceptually. Pragmatically, event analysis is a question of resources: Analyzing events means identifying and classifying a presumably large number of segments in possibly many narratives in order to be able to make qualified statements about events. While the concrete labor connected to such a manual analysis would be less of an issue for automated approaches, the conceptual fuzziness remains an unsolved problem for them. Therefore, approaches to automated event recognition are also still little developed in computational literary studies (and probably also beyond). Exceptions are Sims et al. (2019) and the implementation in Bamman (2021). In their overview, Sims et al. (2019, p. 3624) point out that event detection in literary texts has so far focused on characters and their relations or on the modeling of plot through sentiment. In NLP of non-literary texts, by contrast, there is a long tradition of analyzing events based on an understanding of events that is not or only partly related to a narratological understanding. These NLP approaches are focused on extracting events according to semantic categories (e.g., the automatic content extraction task, cf. Doddington et al. 2004, Walker et al. 2006) or identifying possibly relevant events from texts, whereas in our view a narratology-based approach should include aspects beyond that. In particular, the fact that events belong to both histoire and discours results in a conceptual challenge that should be tackled for a wider engagement with events in computational narratology.

It is exactly the relation to histoire and discours that led the Hamburg Narratology Group to divide events, with regard to their features and functions, into event I and event II. As Hühn (2013, par. 1) elaborates, event I is any change of state and thus a general type of event without further requirements, whereas event II is an event that needs to satisfy certain additional conditions. While the presence of an event I can be determined by its – explicit or implicit – representation in a text, an event II has additional features that need to be determined with “an interpretive, context-dependent decision”. These features differ in detail, but they are typically related to qualities such as relevance, unexpectedness or other kinds of unusualness of the event in question (cf. Table 1 for features of events).

Table 1: Features of events in narrative theory.

| state (s) | process in time | change of state | physical | mental | anthropomorphic agent | intentional | unexpected | ||

| event I features | additional features for event II | ||||||||

| Prince (2010) | Stative Event | x | |||||||

| Active Event | x | x | (x) | ||||||

| Ryan (1986) | Change of physical state | x | x | x | x | (x) | (x) | ||

| Mental act | x | x | x | x | x | ||||

| Ryan (1991) | Happening | x | x | x | |||||

| Action | x | x | x | x | x | ||||

| Martínez and Scheffel (2016) | Happening | x | x | x | |||||

| Action | x | x | x | x | x | ||||

| Lahn and Meister (2013) | Happening | x | x | x | |||||

| Event | x | x | x | x | |||||

| Schmid (2008) | Change of state | x | x | x | |||||

| Event | x | x | x | x | x | (x) | |||

The differentiation between event I and II is also connected to two distinct definitions of narrativity: “The two types of event correspond to broad and narrow definitions of narrativity, respectively: narration as the relation of changes of any kind, and narration as the representation of changes with certain qualities” (cf. Hühn 2013, par. 1). The latter goes back to Aristotle’s characterization of the plot of tragedies by a decisive turning point and is also present in Goethe’s conception of the novella as based on an unheard-of occurrence (“unerhörte Begebenheit”). The description of event II narrativity as “the representation of changes” also highlights the representational character of events.

2.2 From the Representation of Events to Narrativity – and Plot

As we have pointed out, in narrative theory, events are not only considered constituents of narratives, but they are also connected to their narrativity. Additionally, they are connected to representationality. Our event-based approach to modeling plot builds on these aspects, i.e., the constituency of events, their relation to narrativity and their representational character in texts. For this, we focus on the discourse level, or the how of narrations and not, as most approaches do, on the story level, or what of narrations. From a narrative theory perspective, with this we tackle an important aspect of plot that is typically overlooked, even though it is implicitly and explicitly addressed in the definition of plot:

The term “plot” designates the ways in which the events and characters’ actions in a story are arranged and how this arrangement in turn facilitates identification of their motivations and consequences. These causal and temporal patterns can be foregrounded by the narrative discourse itself or inferred by readers. Plot therefore lies between the events of a narrative on the level of story and their presentation on the level of discourse. (Kukkonen 2014, par. 1)

The foregrounding of the narrative discourse and the representation of events on the discourse level described by Kukkonen (2014) is what we try to tackle with our approach. Besides the goal of complementing the approaches that focus on story aspects, and thus providing a theoretically more comprehensive understanding of plot, it is also a decision driven by pragmatic reasons. While there is a variety of more or less structured conceptions of how narratives are built out of defined (story-related) elements, none of these provides an understanding of narrative construction that can be operationalized for general purposes. Not only is Propp’s Morphology of the Folktale focused on the very specific area of (Russian) folktales; supposedly general approaches like Greimas’ actantial model, Bremond’s narrative roles or Pavel’s move grammar are also based on rather schematic assumptions about narrations. If implemented more generally, a considerable amount of interpretive work is necessary in order to detect features qualifying as general structural elements of narratives, just as for Levi-Strauss’ structuralist theory of mythology and the identification of ‘kinship’. Even though there might be a way to make these – in the widest sense: structuralist – approaches applicable, their operationalization is certainly not a straightforward task. To be able to apply any of these concepts of narrative construction in an automated and comprehensive event-based approach to plot, a clearer idea of how events are combined into narratives would first have to be developed on their basis.

Therefore, we consider it not yet feasible to generally address plot by building on story-world-related features. Instead, we focus on the easier-to-grasp representational aspect of events in narratives. Models of narrative constitution like the one by Schmid (2008) describe the way events are turned into their representation in the narrative texts. As Schmid points out, within the narrative constitution model it is these very texts that are the only accessible level and from which the underlying levels belonging to the histoire of narrative need to be inferred. Since the analysis of textual phenomena is easier to implement than the analysis of underlying semantics, or even story world knowledge, we consider it reasonable to focus on the textual representation of events. Even more so because the analysis of events is a starting point for further analyses. Thus the event analysis needs to be as solid as possible in order to reduce the propagation (or even multiplication) of errors in the subsequent steps. Therefore, there is a theoretical reason for designing our approach based on the textual representation of events. This takes into account narrative theory and its focus on textual representation of narratives and its interference with the narrated world or plot.

The core phenomenon here is narrativity, which is, as we will show, our approach to the modeling of plot. Narrativity is, again, a narrative theory term that is employed in a variety of senses. All notions can be described as concerning the “narrativeness” of narrative(s) (Abbott 2014), and they can be grouped by their usage into two understandings: Narrativity is either understood as a kind of essence of narratives or as a quality narratives have in comparison to other narratives (or within them). This means that narrativity can be understood as a general phenomenon of narrative (as distinct from argumentation, description, etc.), or as something that particular narratives display and that can be determined by comparing them to other narratives. Accordingly, the narrative theory discussion about narrativity can be put, as Abbott (2014, par. 5) says, “under four headings: (a) as inherent or extensional; (b) as scalar or intensional; (c) as variable according to narrative type; (d) as a mode among modes”. In other words, while (a) is concerned with the question of narrativity as such, the other three are more interested in discerning specific characteristics of narrativity within or between texts.

From an operationalization perspective, the latter are the more interesting notions since they can be used for classifying or clustering narrative texts. When implementing a scalar understanding of narrativity (b), one would typically be interested in the degree of narrativity of texts, whereas the classification of texts according to narrativity features (c) may be helpful for identifying subgroups of texts like genres, and an operationalization of narrativity as a mode (d) could look at the share of narrative passages in a text (or more texts). As we will discuss in the next section in more detail, our approach is based on the concept of narrativity as a scalar property. Moreover, it is designed as a heuristic both for approaches based on an understanding of narrative as (c), a variable, and (d), a mode.

While none of these narrativity conceptions addresses plot in the first place, there is a connection between events and narrativity described in narrative theory that opens up the possibility of modeling plot based on events. As already discussed, the two event types introduced by Hühn (2013, par. 5) are connected to specific understandings of narrativity. Events I clearly relate to Abbott’s concept of narrativity as (a) an inherent property of narrative texts, since the mere fact that a text consists of events I makes this text a narrative text. Narrativity concepts (b)–(d), on the other hand, can certainly be connected to the analysis of events II. It seems reasonable to infer narrative properties of texts from the quality and quantity of events II and thus to use events II for the operationalization of narrativity. But events I can also be used for this if operationalized in an adequate manner. This is important for our approach because building on events I enables us to focus on representational aspects and to ignore story-related aspects as well as extratextual information that would be needed for event II analysis.

A prerequisite for building an understanding of narrativity as a property “integral to a particular type of narrative” (Hühn 2013, par. 5) without direct reference to events II is the identification of individual events I as especially relevant. Here, the concept of tellability provides a possibility to operationalize the way events are “foregrounded by the narrative discourse itself” (Kukkonen 2014, par. 1) and thus to relate events I to plot. Tellability, just like plot, is not only connected to story, but also to discourse:

Tellability [refers] to features that make a story worth telling, its ‘noteworthiness.’ […] The breaching of a canonical development tends to transform a mere incident into a tellable event, but the tellability of a story can also rely on purely contextual parameters (e.g., the newsworthiness of an event). […] Tellability may also be dependent on discourse features, i.e., on the way in which a sequence of incidents is rendered in a narrative. (Baroni 2012, par. 1)

This possibility of defining tellability with regard to the very representation of a narrative enables us to focus on event I. This is an alternative to the concept of narrativity developed by Piper et al. (2021, p. 3) (“Someone tells someone somewhere that someone did something(s) [to someone] somewhere at some time for some reason”). While we consider their definition of narrativity helpful for furthering computational approaches, it entails the development of a series of approaches (to characters, time, place, action, representation mode, etc.) that need to be combined into one approach before being applicable as narrativity analysis. By contrast, our approach is more straightforward to apply since it is directly based on the representation of events and their narrativity. In the long run, both approaches should be combined.

3. Operationalizing Events and Narrativity

3.1 Narratological Operationalization of Events

Our approach to the annotation of events conceives of events as “any change of state explicitly or implicitly represented in a text” and is therefore based on event I which is “the general type of event that has no special requirements” (Hühn 2013, par. 1). In our operationalization we further differentiate between event types in order to provide for narrativity analysis and we classify the events according to their representation.3

The differentiation of event types is based on the first three event criteria listed in Table 1, namely being a state, a process in time and a change of state. Being a state as well as being a process in time are typically considered prerequisites for changes of state. Since Prince also introduces the notion of a stative event (which is neither a process nor a change of state), we consider it sensible to use all three criteria and base three different event types on them: states, processes in time and changes of state. With this more fine-grained solution we can incorporate more theoretical positions in our event operationalization, such as the one by Prince (2010), as well as processes of speaking, thinking and movement, which are often not considered event candidates. Moreover, we also provide a possibility to distinguish different levels of narrativity according to the three event types: Changes of state have the highest level of narrativity, processes in time a lower one, and states the lowest. We additionally introduce non-events as a category to enable the comprehensive annotation of texts.4

The annotation is guided by the explicit representation of these event types in finite verbs, i.e., the question whether the verb points to a state, a process or a change of state, or none of these in the fictional world. The annotation units are defined as minimal sentences including all words which can be assigned to a finite verb. Thus, there are no overlapping annotations. The determination of verbal phrases as annotation units and the finite verb as central also entails that the change of state needs to be expressed in a single verbal phrase.

The four event categories are determined as follows (examples are taken from Kafka’s Die Verwandlung (‘Metamorphosis’)):

‘Changes of state’ are defined as physical or mental state changes of animate or inanimate entities as for example “Gregor Samsa one morning from uneasy dreams awoke”.

‘Process events’ cover actions and happenings not resulting in a change of state (e.g., processes of moving, talking, thinking, and feeling) as for example “found he himself in his bed into a monstrous insect-like creature transformed”.

‘Stative events’ refer to physical and mental states of animate or inanimate entities as for example “His room lay quietly between the four well-known walls”.

‘Non-events’ have no reference to facts in the story world and typically comprise questions or generic statements or counterfactual passages as for example “She would have closed the door to the apartment”.

With this operationalization, we implement a discourse-based approach to events that includes the narrativity of events. From a narrative theory perspective, our approach connects events to plot by basing the event identification on narrativity. Moreover, we focus on the discourse level and, most importantly, we neither annotate linguistically nor make assumptions about facts in the narrated world beyond the facts represented in the event in question. It is rather the representation of the story, and thus the representation of eventfulness in discourse, that is being tackled by this approach. This becomes obvious when looking at one of the examples above: While “found he himself in his bed into a monstrous insect-like creature transformed” certainly relates to the most impactful change of state of the whole Metamorphosis (i.e., the metamorphosis of Gregor Samsa into an insect), it is here only represented as a process of perception (of a change of state). This example illustrates our focus on event representation and an important advantage of this approach: We avoid the relatively strong interpretations necessary when primarily relating to the story world ‘behind’ its representation in the narrative. With our event type annotations, we do not want to decide whether Gregor’s physical transformation is a fact in the narrated world, but stick to its representation as perception (and the decisive ambiguity of the beginning of the novella).

In addition to this discourse orientation, our approach of annotating the whole text implements the narrative theory understanding of events as basic elements of narratives. Therefore, our approach is suitable for testing the assumptions of narrative theory with regard to their applicability. From a quantification perspective, our conception of events as scalar with regard to their narrativity, together with the complete annotation of texts, enables us to perform further computations on our event annotations.

3.2 Representing Plot as Narrativity Graphs

The annotations of event types are used to model the narrativity of a text as timelines and by that to model its plot. To do this, we use a scaling of the narrativity of our event types and a smoothing procedure. Scaling and smoothing are also used to optimize the plot modeling in Section 4. Before discussing the optimization, we present both operationalization steps briefly.

3.2.1 Narrativity Values

As already discussed above, we implement a scalar notion of narrativity. This is realized by assigning each of the four event types a narrativity value. In doing so, every annotation, and thereby every text span, gets a narrativity value. Beyond implementing the underlying theoretical assumptions about the narrativity of the event types, this also allows us to perform computations on the event annotations. From a statistical perspective, categories should only be transposed into numbers if that can be done in a meaningful way. In our case, we have an obvious ranking of categories. The ranking starts with no narrativity for non-events and extends to the highest narrativity for changes of state. Since the determination of an absolute value for each event category is less obvious, we used predefined narrativity values for a first exploration:

Non-events: narrativity value 0

Stative events: narrativity value 2

Process events: narrativity value 5

Changes of state: narrativity value 7

These values represent not only our intuition about the relevance of the event types for a text’s narrativity, but are also oriented to the discussion about description and narration as text modes with different narrativity (Herman 2005). Nevertheless, it is an open question if these values are appropriate.
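
The assignment of narrativity values to event types can be sketched in a few lines. This is a minimal illustration rather than the implementation used for the study: the names `EventType`, `NARRATIVITY` and `narrativity_series` are hypothetical, and only the four values (0, 2, 5, 7) are taken from the list above.

```python
from enum import Enum


class EventType(Enum):
    # Hypothetical labels for the four annotation categories.
    NON_EVENT = "non_event"
    STATIVE = "stative_event"
    PROCESS = "process_event"
    CHANGE_OF_STATE = "change_of_state"


# Exploratory narrativity values as listed in the text.
NARRATIVITY = {
    EventType.NON_EVENT: 0,
    EventType.STATIVE: 2,
    EventType.PROCESS: 5,
    EventType.CHANGE_OF_STATE: 7,
}


def narrativity_series(event_types, scale=NARRATIVITY):
    """Map a sequence of annotated event types to their narrativity values."""
    return [scale[event_type] for event_type in event_types]


# Toy annotation sequence (not taken from any of the annotated texts).
annotations = [
    EventType.CHANGE_OF_STATE,
    EventType.PROCESS,
    EventType.STATIVE,
    EventType.NON_EVENT,
]
values = narrativity_series(annotations)
```

Keeping the scale as a separate mapping makes it easy to swap in alternative values when the scaling is varied later on.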

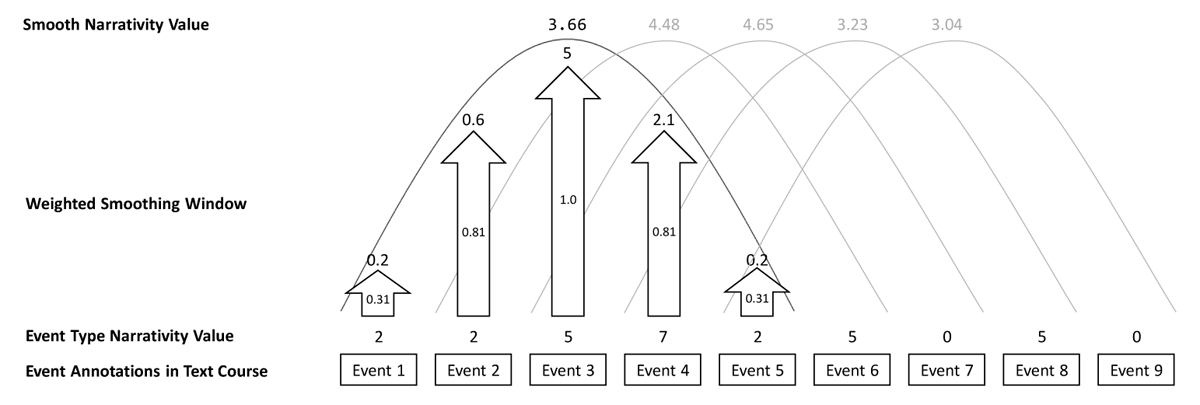

3.2.2 Smoothing

The concepts of narrativity in literary studies do not describe micro phenomena on the word or sentence level, but rather larger text passages the size of a couple of paragraphs and beyond. For this reason, we use a cosine-weighted smoothing approach to model the narrativity of longer text passages. The smoothing process generates narrativity values that can be used to draw an interpretable timeline graph, representing the narrativity over the text’s course or, to use the terminology of narratology, in narration time. Figure 1 shows how we compute a smoothed narrativity value for each event. Due to the cosine weighting, the unsmoothed narrativity values (event type narrativity values) of the events in the outer parts of the smoothing window contribute less to the smoothed narrativity value. In doing so, we assume that context influences the narrativity of a text passage, but that this influence diminishes the further away the contextual events are.

As for the scaling, the size of the smoothing window is an operationalization decision that can be used for optimization. For our exploration we set the value of the window to 100, assuming that passages of 100 events (i.e., 100 verb phrases) are a reasonable size with regard to narrativity.
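
A cosine-weighted smoothing of this kind could look as follows. The sketch rests on assumptions that the text does not specify: the weights follow a raised-cosine shape, the window is centred on the event and covers `window // 2` events on each side, and windows that reach beyond the text boundaries are truncated and renormalized. The function name `smooth` is hypothetical.

```python
import math


def smooth(values, window=100):
    """Cosine-weighted moving average over a centred window of events.

    Assumption: raised-cosine weights, 1.0 at the centre and decreasing
    towards the window edges; truncated windows are renormalized.
    """
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        weighted_sum = 0.0
        weight_total = 0.0
        for offset in range(-half, half + 1):
            j = i + offset
            if 0 <= j < len(values):
                weight = 0.5 * (1 + math.cos(math.pi * offset / (half + 1)))
                weighted_sum += weight * values[j]
                weight_total += weight
        # weight_total is never zero: the centre event always has weight 1.0.
        smoothed.append(weighted_sum / weight_total)
    return smoothed


# Toy series: a single change of state surrounded by non-events.
spike = smooth([0, 0, 7, 0, 0], window=2)
flat = smooth([5] * 10, window=4)
```

In the toy series, the isolated high value is spread over its neighbours while the peak stays at the original position; a constant series is left unchanged.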

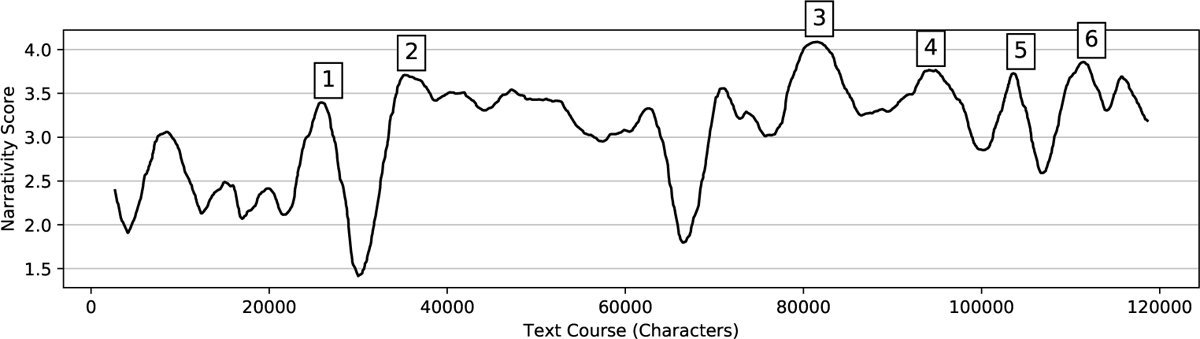

3.2.3 Evaluation by Exploration

In Vauth et al. (2021), we evaluated our narrativity timeline graphs by simple exploration of the graph’s peaks. As discussed above, the values of the event types were set to 0, 2, 5, and 7, and the smoothing window covers 100 events. Figure 2 shows that the highest peaks of the timeline representing the narrativity in Franz Kafka’s Metamorphosis are located in text passages where we find actions that are somehow related to the event II concept. At least, it would be reasonable to say that these passages are central for the development of the plot. Therefore, this explorative evaluation indicates that narrativity graphs have the potential to model a narrative’s plot as a timeline and can detect eventful text parts.

Figure 2: Peaks in Franz Kafka’s The Metamorphosis as an evaluation by exploration (Vauth et al. 2021). The annotated peaks are:

After the metamorphosis, Gregor exposes himself for the first time to his family and colleague.

Gregor leaves his room, his mother loses consciousness, the colleague flees and his father forces him back into his room.

Gregor’s father throws apples at him. Gregor gets seriously wounded. Escalation of the father-son conflict.

Three tenants move into the family’s flat.

Gregor shows himself to the tenants, who then flee.

Gregor dies.

4. Optimizing Narrativity for Plot Representation

Our main idea for optimizing the narrativity graphs in their capability to model plot is a quantitative comparison to the text passages that are mentioned in summaries of the annotated texts. With this, we can improve our graphs as plot representations which represent the degree of narrativity of events over the text course and highlight the most tellable event sequences of a narration.

This approach is based on the assumption in narrative theory that narrativity and tellability are strongly related. At the same time, we use this procedure to test whether our approach of modeling narrativity on the basis of event representation is suitable also from a story-related notion of narrativity and can thus be considered a comprehensive approach. This is possible because the summaries refer primarily to the level of histoire and do not consider the mode of representation.

For optimization, we used four manually annotated German prose texts: Das Erdbeben in Chili (1807) by Heinrich von Kleist, Die Judenbuche (1842) by Annette von Droste-Hülshoff, Krambambuli (1884) by Marie von Ebner-Eschenbach and the already mentioned Die Verwandlung (1916) by Franz Kafka.

4.1 Preliminaries

4.1.1 Creating Summaries as Resources

Our first approach was to base our optimization on summaries by expert readers. For this purpose, we collected summaries written by literary scholars and published in the Kindler Literatur Lexikon (Arnold 2020), the best-known encyclopedia of German literature. However, when reviewing these summaries, it became apparent that a high proportion of them consisted of interpretative passages and comments on the text, and that the actual summary of the texts was only a small part that also varied in its realization. Because of that, these expert summaries were inappropriate for our purpose of modeling plot.

As a second attempt, we collected summaries of our manually annotated stories from Wikipedia. These summaries turned out to be more focused on the text’s plot. Still, we noticed that some of these summaries seemed to place an arbitrary emphasis on the summarized parts of the stories. Therefore, it is not reasonable to assume that these summaries only focus on the most tellable happenings.

To compensate for the randomness of a single text summary, we finally had students write summaries for four of our manually annotated texts. The work assignment was:

Read the selected primary text.

Write a summary as simple as possible.

Summarize the main events of the text from your point of view.

Do not use any aids but the narrative itself.

Do not write more than 20 sentences.

In this way, we received between 9 and 11 independent summaries for each of our four texts. Based on these, we could evaluate which passages of the stories are mentioned frequently, assuming that passages mentioned by more readers display a higher degree of tellability.

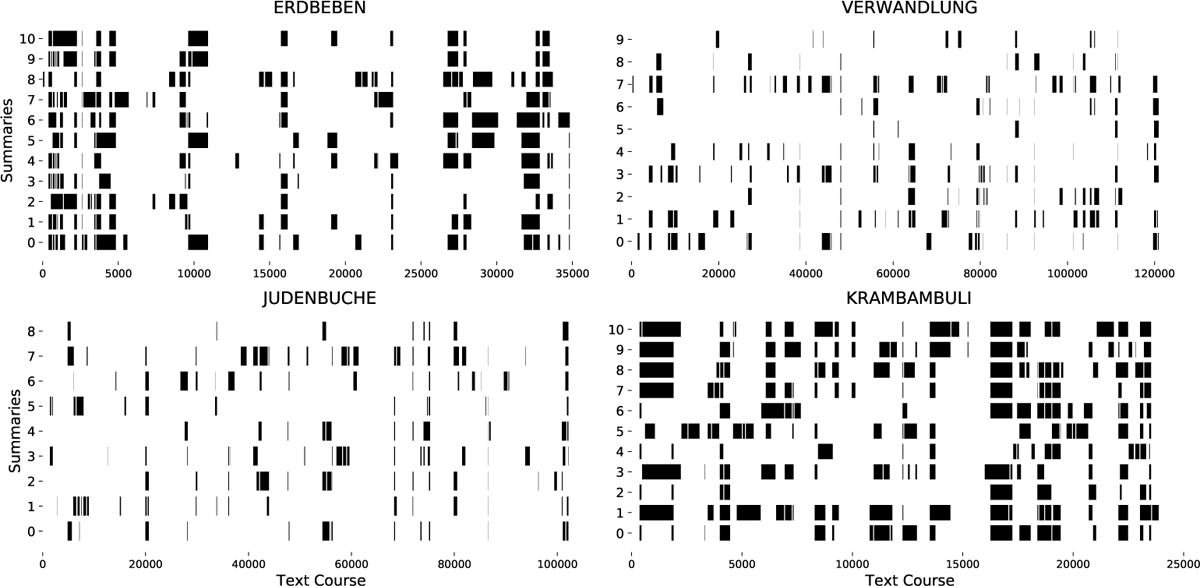

4.1.2 Summary-based Text Annotation

To measure the frequency, and thereby the tellability, of text passages, we manually annotated for each sentence of a summary the text spans it referred to. For this, only the sentences that refer to clearly identifiable happenings were taken into account. For example, a sentence like “Kafka’s Metamorphosis tells the story of Gregor’s expulsion from the civilized world” is too general a summary, while the reference of a sentence like “Gregor is wounded by his father” can be located in the narrative without any problems.

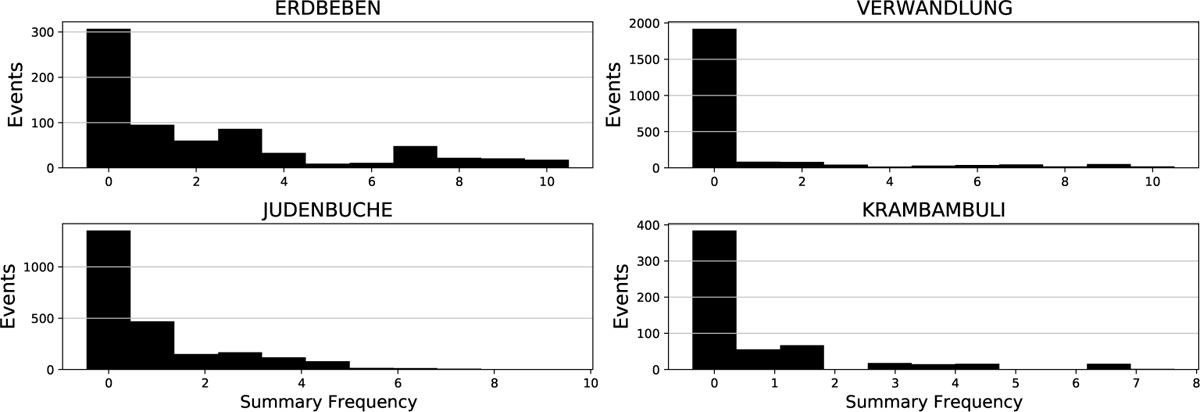

Figure 3 shows the annotations of summaries for the four summarized texts. The shortest of these texts, Krambambuli, has a length of about 25,000 characters, while the longest, Die Verwandlung, has a length of about 120,000 characters. This has an impact on the summaries and their usage for our optimization task. As Figure 3 shows, many summaries of the two shorter texts, Erdbeben and Krambambuli, refer to relatively large parts of the narratives. In these shorter texts, however, the fact that an event is mentioned multiple times is not a strong indication that the passage is particularly tellable. It simply results from the fact that large parts of the texts are mentioned by all summaries. For the optimization of the narrativity graphs, however, it is important that the summaries are as selective as possible, because in the next step we will determine how many summaries refer to the same events.

4.1.3 Optimization Method

Our optimization approach is based on the comparison of the event-based narrativity graphs that we presented at the end of the last section with the tellability scores of individual text passages quantified based on the summaries. For this purpose, we determined for each event annotation (subsection 3.1), in addition to the smoothed narrativity value, in how many summaries the event is mentioned. This resulted in a tellability value for events defined by the number of summaries in which the event in question is mentioned. With this, narrativity and tellability values are available for the entire text, and their correlation can be tested. Assuming that tellability is connected to high narrativity, the goal of optimization is to find a setting that assigns passages with high tellability values a high narrativity value.

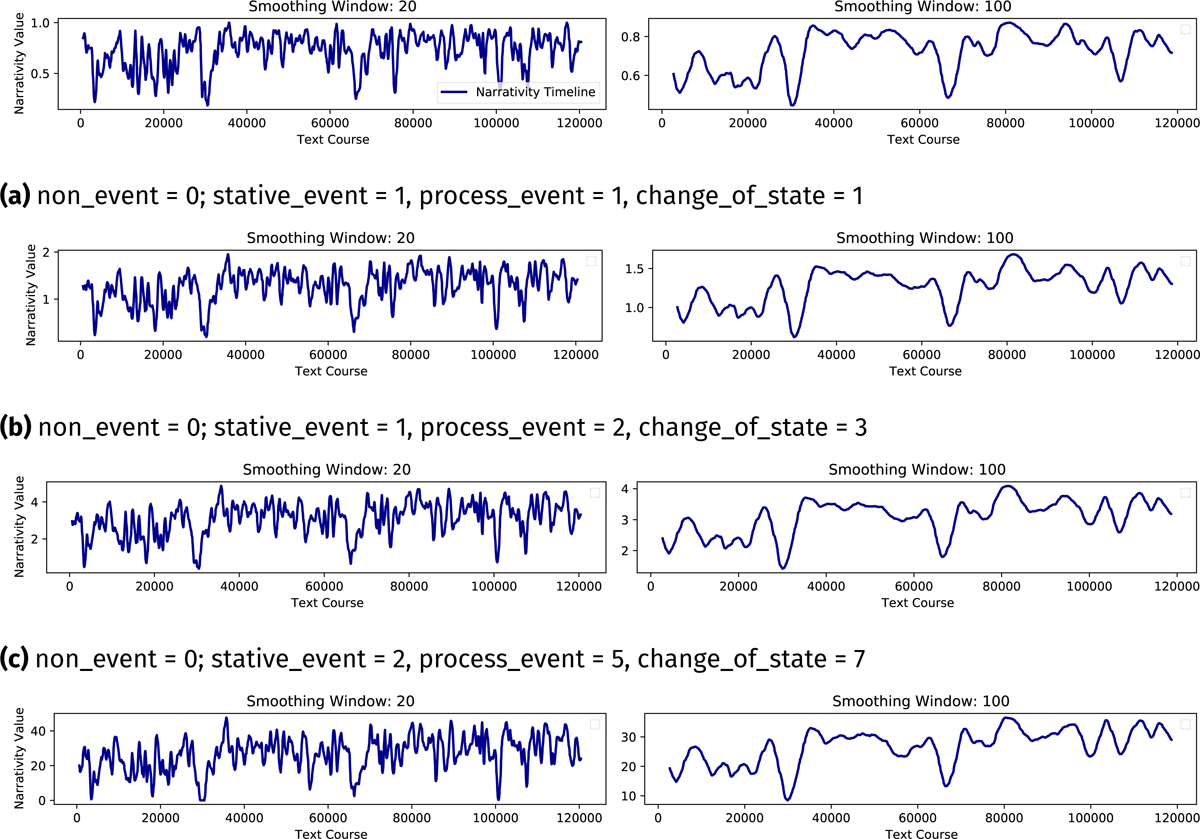

To find a setting that raises the correlation of narrativity and tellability, we adjusted both the narrativity scaling and the size of the smoothing window. Figure 5 shows, for two smoothing windows and four event type scales, that the structure of the narrativity graphs is mainly affected by the size of the smoothing window. A comparison of the timelines in Figures 5a, 5b, 5c, and 5d shows considerable differences from character 40,000 to 60,000 between the graphs on the left (smoothing window 20) and those on the right (smoothing window 100). In certain passages, the change of narrativity scales also influences the graph.
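The interplay of event type scale and smoothing window can be illustrated with a minimal sketch. It assumes a plain centered moving average that is truncated at the text boundaries, which may differ from the smoothing function used for the narrativity graphs; the function name `narrativity_graph` and the example weights are hypothetical.

```python
from typing import Dict, List


def narrativity_graph(event_types: List[str], scale: Dict[str, float], window: int) -> List[float]:
    """Map event types to narrativity values and smooth them over `window` events.

    Assumption: centered moving average, shortened at the start and end of the text.
    """
    raw = [scale[t] for t in event_types]
    half = window // 2
    smoothed = []
    for i in range(len(raw)):
        lo = max(0, i - half)
        hi = min(len(raw), i + half + 1)
        smoothed.append(sum(raw[lo:hi]) / (hi - lo))
    return smoothed


# Example weights, chosen for illustration only.
scale = {"non_event": 0, "stative_event": 2, "process_event": 5, "change_of_state": 7}
event_types = ["non_event", "change_of_state", "non_event", "non_event", "process_event"]

narrow = narrativity_graph(event_types, scale, window=1)  # no smoothing
wide = narrativity_graph(event_types, scale, window=3)    # each value averaged with its neighbours
print(narrow)
print(wide)
```

With a window of one event the graph reproduces the raw scale values, while larger windows flatten isolated peaks, which is the effect visible when comparing the left and right columns of the figure.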

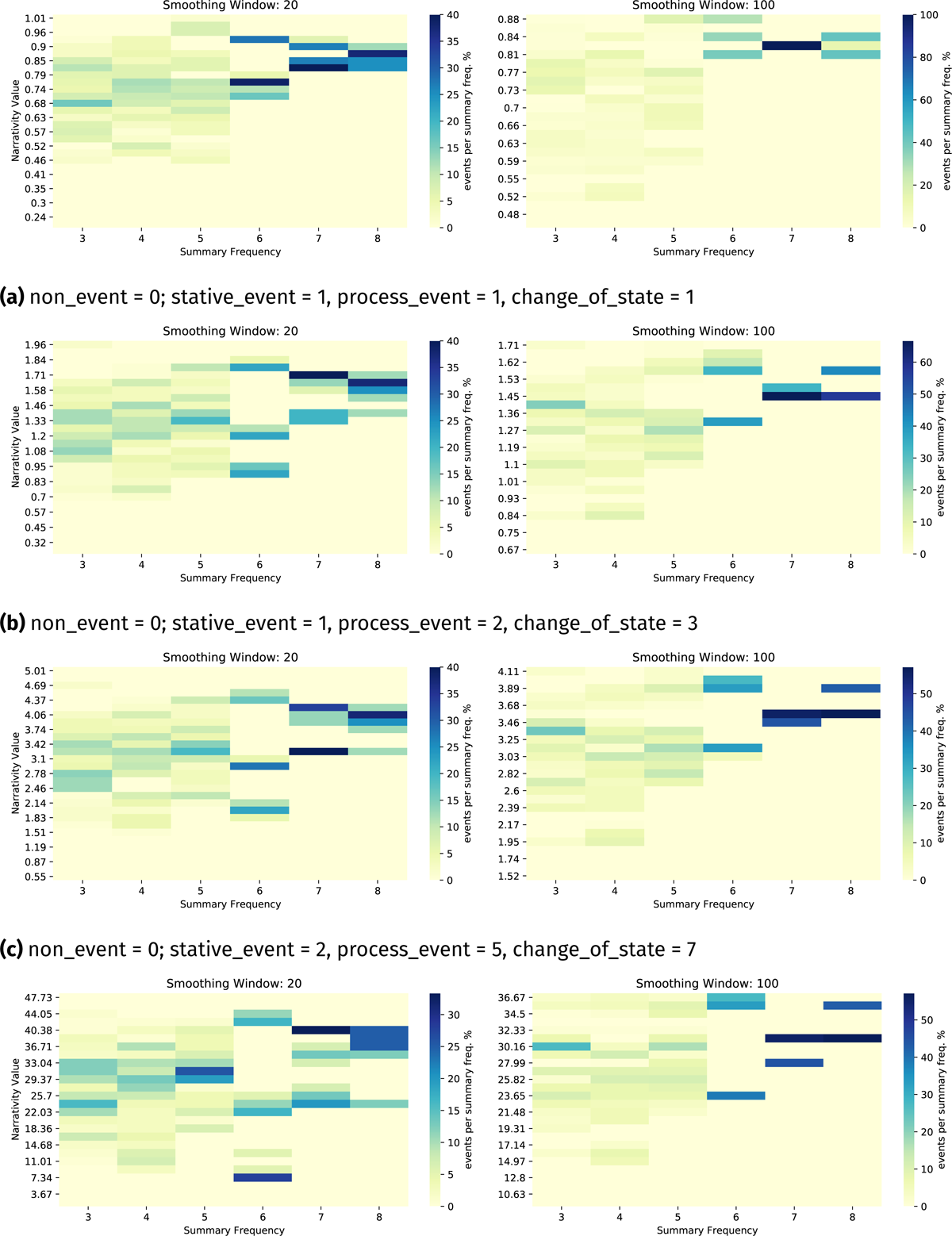

In Figure 6, a relation between the frequency of event mentions in different summaries and the narrativity of these events becomes apparent. The heat maps show the relation of narrativity values and summary frequency for each constellation of smoothing window and narrativity value shown in Figure 5. Due to the changing event type scales, the narrativity values on the y axis differ between Figures 6a, 6b, 6c and 6d. For all eight heat maps the same tendency is visible: The lower right area of the maps is light yellow, which means that there are no frequently mentioned events with a low narrativity value. Also, the proportion of events with a higher narrativity rises the more often the events are mentioned, as the darker areas on the top right of the heat maps show. Generally, all frequently mentioned events have a comparatively high narrativity value, with events mentioned in more than five summaries having a narrativity score higher than the overall average.

At the same time, the share of frequently mentioned events is comparatively small. This is relevant for our optimization. Because the proportion of frequently mentioned events is so small, they have only little effect on correlation measurements. Instead, the correlation is heavily affected by the large majority of events that are not frequently mentioned. For this reason, no particularly high correlation between tellability and narrativity can be expected, especially if we take all event annotations of a text into account. However, since we use correlation for optimization and not for evaluation, the correlation numbers can still be used for comparing the different settings.

4.2 Optimizing Smoothing

For the optimization of the smoothing windows, we used smoothing windows from 10 to 190 events for each of the four texts in combination with different narrativity scalings for the four event types. With respect to the scalings, non events were always set at a narrative value of 0, while process events and changes of state were assigned a narrative value of at least 1 and at most 50. This results in 1,750 permutations. Combining these with the different smoothing windows (in steps of 20) leads to a total of 105,000 constellations per text that have been compared.

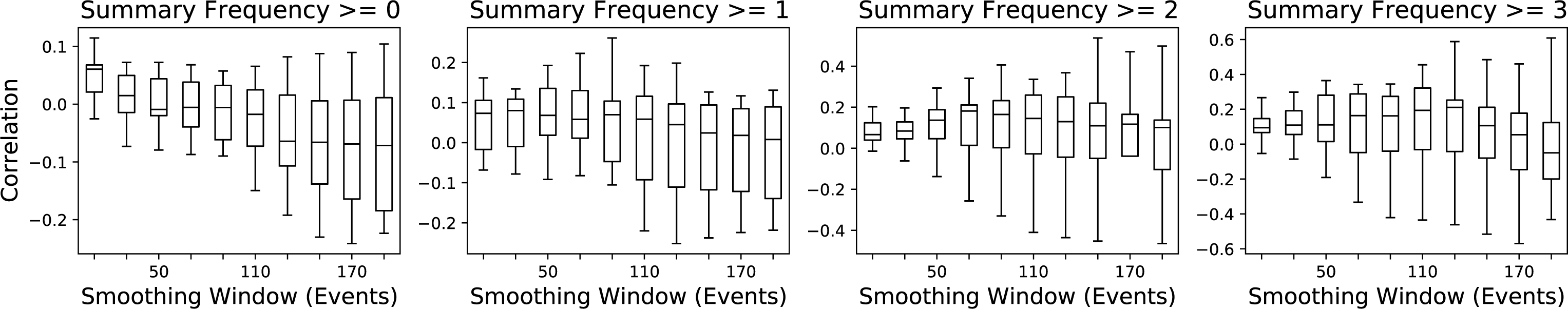

With regard to optimizing smoothing windows, the influence of the window size on the correlation of the narrativity and the tellability of an event is shown in Figure 7. The correlation values of the four texts for each smoothing window were combined into a single box plot. If all events of the texts are considered (Summary Frequency ≥ 0), the correlation lies roughly between -0.2 and 0.1 depending on the smoothing window. Again, these low correlation values are due to the fact that the Summary Frequency is 0 for the majority of the events (see Figure 4), whereas the narrative values of these events still vary and thus influence the correlation.

For this reason, we have used different filter settings for the box plot visualization. If only the events mentioned in at least one summary (Summary Frequency ≥ 1) are taken into account, the maximum correlation value rises above 0.2 with a smoothing window of 90 events. Correlation values increase even more if only the events mentioned in at least 2 or 3 summaries are included. In the first case, the maximum correlation is close to 0.5 and in the second case even 0.6. This confirms our assumption that single summaries would not have been sufficient resources for the optimization (see 4.1.1).
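The filtering by minimum summary frequency can be sketched as follows. This is a minimal illustration that assumes Pearson's correlation coefficient; the function names and the toy values are hypothetical and do not reproduce our data.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; returns NaN if one of the variables is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return float("nan")
    return cov / math.sqrt(var_x * var_y)


def filtered_correlation(narrativity: Sequence[float],
                         frequency: Sequence[int],
                         min_frequency: int) -> float:
    """Correlate narrativity and summary frequency for events mentioned
    in at least `min_frequency` summaries."""
    pairs = [(n, f) for n, f in zip(narrativity, frequency) if f >= min_frequency]
    if len(pairs) < 2:
        return float("nan")  # too few events left for a correlation
    xs, ys = zip(*pairs)
    return pearson(xs, ys)


# Toy values, invented for illustration: most events are never mentioned.
narrativity = [3.0, 1.0, 4.0, 2.0, 5.0, 6.0, 8.0]
frequency = [0, 0, 0, 0, 1, 2, 4]

r_all = filtered_correlation(narrativity, frequency, min_frequency=0)
r_mentioned = filtered_correlation(narrativity, frequency, min_frequency=1)
print(r_all, r_mentioned)
```

The sketch also makes the trade-off of the filter visible: the stricter the threshold, the fewer events remain, until no correlation can be computed at all.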

In all four subplots of Figure 7, we can observe that, beyond a certain point, both the maximum and the median of the correlation values decrease as the size of the smoothing window increases. Considering the median values of all four subplots, a first conclusion of this optimization procedure is therefore that smoothing windows of a size larger than 100 events are not useful.

As for the scattering of the correlation values, which we depict with individual box plots, it is important to note that it is not primarily caused by the fact that we summarize the constellations for four texts in one box plot. Instead, it is mainly caused by the varied narrativity scaling.

4.3 Optimizing Event Type Values

For each of the 1,750 event value combinations and each smoothing window, we determined the average correlation of narrativity and tellability for the four texts. The five highest correlation values for these configurations are listed in Table 2. Here, we perform the same filtering as in Figure 7 and determine the highest correlation values considering the minimum summary frequency. This again results in increasing correlation values according to the filtering, i.e., more frequently mentioned events have a higher correlation.
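The cross-text search can be sketched as a small grid search. This is an illustration under stated assumptions, not our implementation: it assumes Pearson's correlation, a plain centered moving average, and the ordering constraint stative ≤ process ≤ change of state; all function names, value ranges and toy texts are hypothetical and much smaller than the tested constellations.

```python
import math
from itertools import product
from typing import Iterable, List, Sequence, Tuple

Text = Tuple[List[str], List[int]]  # (event types, summary frequency per event)


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return float("nan")
    return cov / math.sqrt(var_x * var_y)


def smooth(values: List[float], window: int) -> List[float]:
    """Centered moving average, shortened at the text boundaries (assumption)."""
    half = window // 2
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out


def grid_search(texts: List[Text], values: Iterable[int],
                windows: Iterable[int], top_k: int = 5):
    """Return the top_k (mean correlation, scale, window) configurations."""
    windows = list(windows)
    results = []
    for stative, process, change in product(list(values), repeat=3):
        if not stative <= process <= change:  # assumed ordering constraint
            continue
        scale = {"non_event": 0, "stative_event": stative,
                 "process_event": process, "change_of_state": change}
        for window in windows:
            rs = [pearson(smooth([scale[t] for t in types], window), freq)
                  for types, freq in texts]
            if any(math.isnan(r) for r in rs):
                continue  # constant graph, correlation undefined
            results.append((sum(rs) / len(rs), (0, stative, process, change), window))
    results.sort(key=lambda item: item[0], reverse=True)
    return results[:top_k]


# Two toy texts, invented for illustration only.
text_a = (["non_event", "stative_event", "process_event", "change_of_state",
           "non_event", "process_event"], [0, 0, 1, 3, 0, 1])
text_b = (["stative_event", "non_event", "change_of_state", "process_event",
           "stative_event", "non_event"], [0, 0, 2, 1, 0, 0])

top = grid_search([text_a, text_b], values=range(0, 4), windows=[1, 3])
for mean_r, scale_values, window in top:
    print(round(mean_r, 4), scale_values, window)
```

Averaging the correlation over all texts before ranking is what distinguishes this cross-text search from a per-text optimization, where each text could end up with its own best scale.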

Table 2: Optimizing event type scaling. Maximum average correlation for the four manually annotated texts and the tested event type scalings.

| Summary Frequency | non_event | stative_event | process_event | change_of_state | smoothing window | correlation mean |
|---|---|---|---|---|---|---|
| ≥ 0 | 0 | 7 | 7 | 14 | 10 | 0.0597 |
| | 0 | 7 | 7 | 13 | 10 | 0.0597 |
| | 0 | 6 | 6 | 10 | 10 | 0.0597 |
| | 0 | 11 | 11 | 18 | 10 | 0.0597 |
| | 0 | 10 | 10 | 16 | 10 | 0.0597 |
| ≥ 1 | 0 | 0 | 12 | 12 | 50 | 0.1124 |
| | 0 | 0 | 18 | 18 | 50 | 0.1124 |
| | 0 | 0 | 19 | 19 | 50 | 0.1124 |
| | 0 | 0 | 10 | 10 | 50 | 0.1124 |
| | 0 | 0 | 11 | 11 | 50 | 0.1124 |
| ≥ 2 | 0 | 11 | 18 | 18 | 70 | 0.1356 |
| | 0 | 9 | 15 | 15 | 70 | 0.1356 |
| | 0 | 12 | 20 | 20 | 70 | 0.1356 |
| | 0 | 10 | 17 | 17 | 70 | 0.1356 |
| | 0 | 3 | 5 | 5 | 70 | 0.1356 |
| ≥ 3 | 0 | 19 | 20 | 20 | 50 | 0.1623 |
| | 0 | 18 | 19 | 19 | 50 | 0.1623 |
| | 0 | 17 | 18 | 18 | 50 | 0.1623 |
| | 0 | 11 | 12 | 12 | 50 | 0.1622 |
| | 0 | 7 | 7 | 7 | 50 | 0.1622 |

More interesting for our purposes, however, are the scaling trends in the four sections of Table 2 corresponding to the four tellability values (i.e., the Summary Frequencies ≥ 0, 1, 2, 3). Here, the best constellations show differences with regard to the narrativity value scaling that seem to be related to the tellability values. For constellations where all events are considered (Summary Frequency ≥ 0), there is no difference in scaling between stative events and process events. A similar situation applies to events mentioned in at least three summaries (see the fourth section of Table 2). In these two sections, stative events and process events have (almost) the same narrative values. The opposite is true for the second section (Summary Frequency ≥ 1) and, to a lesser degree, also for the third section (Summary Frequency ≥ 2), where process events have 1.66 times the narrativity value of stative events.
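The ratio for the third section can be recomputed directly from the five rows for Summary Frequency ≥ 2 in Table 2. The short sketch below only restates this arithmetic; the variable names are chosen for illustration.

```python
# (stative_event, process_event) values of the five best configurations
# for Summary Frequency >= 2, copied from Table 2.
rows = [(11, 18), (9, 15), (12, 20), (10, 17), (3, 5)]

# Ratio of process event value to stative event value per configuration.
ratios = [process / stative for stative, process in rows]
mean_ratio = sum(ratios) / len(ratios)

print([round(r, 2) for r in ratios])
print(round(mean_ratio, 2))
```

The individual ratios lie between roughly 1.64 and 1.70, so the factor of about two thirds more weight for process events holds across all five configurations rather than for a single row.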

The maximum average correlation values in Table 2 are lower than the maximum correlation values in the box plots of Figure 7 because, in the latter, a different configuration of event type scaling yields the highest correlation value for each individual text. There, the correlation values were determined for every text separately, whereas the values in Table 2 are averages over all four texts. The per-text evaluation therefore results in higher correlation values. However, none of the correlation values for the four tellability values in Table 2 is significantly below the median values of the box plot evaluation in Figure 7. Also, this cross-text optimization can be regarded as more adequate since our approach to plot modeling is intended for the analysis of larger automatically annotated corpora.

However, it is debatable whether one should follow the scaling in the first and fourth sections or the scaling in the second and third sections. We consider the latter more conclusive. The first section takes all events into account and thus, as we have explained above, the resulting correlation values are not very meaningful. The correlation values in the fourth section, in turn, have limited significance because they are based on a comparatively small number of events. Here we refer again to the histograms in Figure 4, which show the number of events with regard to their mentions in summaries.

5. Conclusion

We have presented an approach to plot that is based on the representation of events and narrativity as conceived of in narrative theory. With this, we add a so far little explored aspect to the computational analysis of events, narrativity and plot, namely their discourse-oriented operationalization. This focus on the representation of events allows us to leave aside story-related issues to a great extent. Thus, we avoid problems that typically arise when analyzing plot with regard to story, where reader-related information is needed or, alternatively, a rather complex analysis is not yet possible and needs to be approximated by instrumental variables.

We have shown how the establishment of narrativity graphs can build on narrative theory event concepts including scalar narrativity and how this can be related to the modeling of plot.

The parametrization of the narrativity graphs has been optimized with regard to the tellability of events assessed in readers’ summaries of narratives. For our optimization goal, the considerations and measurements we have presented when discussing Figure 7 and Table 2 yield two main results:

The smoothing windows should include between 50 and 100 events. This is indicated by the box plot evaluations and confirmed by the best configurations in Table 2.

For scaling, a clear weighting gradient of non events and stative events on the one hand and process events and changes of state on the other hand is important.

The outcome of this work is a heuristic that is firmly rooted in narrative theory and can be implemented for the analysis of narratives. Since we have operationalized a scalar notion of narrativity we can, in terms of the narrativity notions in Abbott (2014) discussed above, use it for the analysis of narrativity as a variable as well as a mode of and within narrative texts. Even more so, since our approach has proven to be automatable to a satisfactory extent.5

In addition to the development of an approach for analyzing the narrativity of texts, this contribution shows how theoretical concepts and their computational implementation can be closely connected. With regard to the concept of events and narrativity, the scalar operationalization of events based on their narrativity together with our optimization efforts have shown the plausibility of the underlying assumptions from narrative theory. Such connections between theory and implementation are a so far little considered aspect of computational literary studies that should be emphasized more.

6. Data availability

Data can be found here: https://github.com/forTEXT/EvENT_Dataset

7. Acknowledgements

We would like to thank our student annotators who have annotated tens of thousands of events, Gina-Maria Sachse, Michael Weiland and Angela Nöll, as well as our students in the course ‘Literarische Konflikte’ during the winter term 2021/2022 at Technical University of Darmstadt for the writing of the summaries of the analyzed texts and Gina-Maria Sachse for the annotation of the summaries.

This work has been funded by the German research funding organization DFG (grant number GI 1105/3-1).

8. Author contributions

Evelyn Gius: Conceptualization, Formal Analysis, Writing – original draft, Writing – review & editing

Michael Vauth: Conceptualization, Formal Analysis, Writing – original draft, Writing – review & editing

Notes

- For a demo cf. https://narrativity.ltdemos.informatik.uni-hamburg.de/.

- For a more comprehensive overview of the variety of terms and differences in scope cf. Schmid (2008, p. 241), Martínez and Scheffel (2016, p. 26) and Lahn and Meister (2013, p. 215).

- Cf. Vauth and Gius (2021) for a detailed annotation guideline.

- We also use additional properties derived from the criteria in Table 1 and additionally determine whether events are irreversible, intentional, unpredictable, persistent, mental or iterative (cf. Vauth and Gius (2021) for the comprehensive description of the annotation categories and tagging routines). This is not discussed here since it is directed towards event II detection and integration of (more) story world knowledge in possible further steps and thus beyond the scope of this contribution.

- We have reached an F1 score of 0.71 for the event classification on unseen texts (cf. Vauth et al. 2021), which results in a correlation of narrativity graphs typically reaching between 0.8 and 0.9.

References

1 Abbott, H. Porter (2014). “Narrativity”. In: the living handbook of narratology. Ed. by Peter Hühn, John Pier, Wolf Schmid, and Jörg Schönert. University of Hamburg. URL: https://www-archiv.fdm.uni-hamburg.de/lhn/node/27.html (visited on 10/18/2022).

2 Arnold, Heinz Ludwig, ed. (2020). Kindlers Literatur Lexikon (KLL). J.B. Metzler. DOI: http://doi.org/10.1007/978-3-476-05728-0.

3 Bal, Mieke (1985). Narratology. Introduction to the Theory of Narrative. Trans. by Christine van Boheemen. Toronto Univ. Press.

4 Bamman, David (2021). BookNLP. A natural language processing pipeline for books. URL: https://github.com/booknlp/booknlp (visited on 11/10/2021).

5 Baroni, Raphaël (2012). “Tellability”. In: the living handbook of narratology. Ed. by Peter Hühn, John Pier, Wolf Schmid, and Jörg Schönert. University of Hamburg. URL: https://www-archiv.fdm.uni-hamburg.de/lhn/node/30.html (visited on 10/18/2022).

6 Boyd, Ryan L., Kate G. Blackburn, and James W. Pennebaker (2020). “The narrative arc: Revealing core narrative structures through text analysis”. In: Science Advances 6 (32). DOI: http://doi.org/10.1126/sciadv.aba2196.

7 Chihaia, Matei (2021). “Sinaloa in der ZEIT. Computergestützte Analyse von Ereignishaftigkeit und Erzählwürdigkeit in einem Korpus journalistischer Erzählungen”. In: DIEGESIS 10 (1). URL: https://www.diegesis.uni-wuppertal.de/index.php/diegesis/article/view/425 (visited on 10/27/2022).

8 Doddington, George R., Alexis Mitchell, Mark A. Przybocki, Lance A. Ramshaw, Stephanie M. Strassel, and Ralph M. Weischedel (2004). “The automatic content extraction (ACE) program-tasks, data, and evaluation”. In: Proceedings of the Fourth International Conference on Language Resources and Evaluation (LREC’04). European Language Resources Association (ELRA), pp. 837–840.

9 Genette, Gérard (1980). Narrative discourse: an essay in method. Cornell University Press.

10 Herman, David (2005). “Events and Event-Types”. In: The Routledge Encyclopedia of Narrative Theory. Routledge, pp. 151–152.

11 Hühn, Peter (2013). “Event and Eventfulness”. In: the living handbook of narratology. Ed. by Peter Hühn, John Pier, Wolf Schmid, and Jörg Schönert. University of Hamburg. URL: https://www-archiv.fdm.uni-hamburg.de/lhn/node/39.html (visited on 10/18/2022).

12 Jockers, Matthew (2015). Revealing Sentiment and Plot Arcs with the Syuzhet Package. URL: http://www.matthewjockers.net/2015/02/02/syuzhet/ (visited on 10/27/2022).

13 Kukkonen, Karin (2014). “Plot”. In: the living handbook of narratology. Ed. by Peter Hühn, John Pier, Wolf Schmid, and Jörg Schönert. Hamburg University Press. URL: https://www-archiv.fdm.uni-hamburg.de/lhn/node/115.html (visited on 10/18/2022).

14 Lahn, Silke and Jan Christoph Meister (2013). Einführung in die Erzähltextanalyse. Metzler.

15 Lotman, Jurij (1977). The Structure of the Artistic Text. Dept. of Slavic Languages and Literature, University of Michigan.

16 Martínez, Matías and Michael Scheffel (2016). Einführung in die Erzähltheorie. 10th ed. C.H. Beck.

17 Piper, Andrew, Richard Jean So, and David Bamman (2021). “Narrative Theory for Computational Narrative Understanding”. In: Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP). Association for Computational Linguistics, pp. 298–311. DOI: http://doi.org/10.18653/v1/2021.emnlp-main.26.

18 Prince, Gerald (2010). A grammar of stories: An introduction. Mouton.

19 Rimmon-Kenan, Shlomith (1983). Narrative Fiction. Contemporary Poetics. Routledge.

20 Ryan, Marie-Laure (1986). “Embedded Narratives and Tellability”. In: Style 20 (3), pp. 319–340.

21 Ryan, Marie-Laure (1991). Possible worlds, artificial intelligence, and narrative theory. Indiana University Press.

22 Schmid, Wolf (2008). Elemente der Narratologie. 2nd ed. De Gruyter.

23 Sims, Matthew, Jong Ho Park, and David Bamman (2019). “Literary Event Detection”. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Association for Computational Linguistics, pp. 3623–3634. DOI: http://doi.org/10.18653/v1/P19-1353.

24 Stierle, Karlheinz (1973). “Geschehen, Geschichte, Text der Geschichte”. In: Geschichte – Ereignis und Erzählung. Ed. by Reinhart Koselleck and Wolf-Dieter Stempel. Fink, pp. 530–534.

25 Tomasevskij, Boris (1971). Teorija literatury. Poetika. Rarity reprints. Bradda Books.

26 Vauth, Michael and Evelyn Gius (2021). Richtlinien für die Annotation narratologischer Ereigniskonzepte. DOI: http://doi.org/10.5281/zenodo.5078174.

27 Vauth, Michael, Hans Ole Hatzel, Evelyn Gius, and Chris Biemann (2021). “Automated Event Annotation in Literary Texts”. In: CHR 2021: Computational Humanities Research Conference, pp. 333–345. URL: http://ceur-ws.org/Vol-2989/short_paper18.pdf (visited on 10/18/2022).

28 Walker, Christopher, Stephanie Strassel, Julie Medero, and Kazuaki Maeda (2006). ACE 2005 Multilingual Training Corpus. Linguistic Data Consortium. DOI: http://doi.org/10.35111/mwxcvh88.